James Brown - Software Engineer

APIs: OpenGL (Graphics), FMOD (Audio)

Languages Used: C++, GLSL

Primary Roles: Render Pipeline, Scenes, Game Objects, Shaders

Additional Roles: User Interface

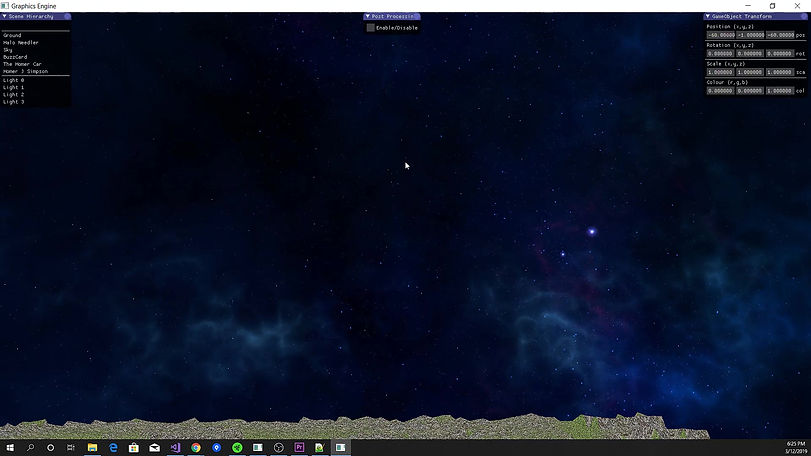

This is a custom game engine built on OpenGL and FMOD. I initially developed it for a second-year assignment while studying at the Academy of Interactive Entertainment, and have since expanded its capabilities in my own time. Only the basic render pipeline, lighting, game object and scene systems were developed for the initial assignment. I have since added Perlin noise terrain generation, an editor UI that mimics Unity's, and an audio system built on the FMOD audio API.

*Video Contains Audio

Mesh & Terrain System

The mesh class uses a data struct to store each vertex's position, normal and texture coordinate, and provides functions for creating primitive meshes such as cubes, quads, screen quads and planes. It can also procedurally generate texture coordinates for meshes when they aren't provided. Each primitive is built by manually assigning the vertex data in its creation function, and uses an index buffer to avoid storing redundant, repeated vertex data.

Additionally, this system can create a quad grid of any size (rows by columns), which is primarily used for generating terrain. It builds a vector of vertices and a vector of ints (the indices for the index buffer) in a double for loop, creating one quad each pass in the same way as the fixed-size quad creation function found on line seventy-three, except that the loop iterators are applied as an offset.

The texture coordinates are then generated by finding the minimum and maximum x and z values across the vertices and calculating an absolute range (i.e. (min.x - max.x) * -1) and an offset (i.e. 0 - min.x) for each axis. Once all ranges and offsets are calculated, they are passed to the GenerateTexCoord function, which applies the offsets to the current vertex's x and z position and divides the results by the absolute ranges, returning a two-component vector with each coordinate between zero and one. The new texture coordinates are assigned to the current vertex, and once every vertex has been processed, the initialize function binds the vertex data to the vertex array buffers.

Like Unity, every mesh is attached to a game object, which I go into in more detail further down. After the terrain mesh is created and attached to a game object, the GenerateTerrain function generates a Perlin noise texture stored in the red channel, which is used to offset each vertex's y position in the vertex shader.
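As a rough illustration of the grid and texture coordinate steps described above, here is a minimal CPU-side sketch. It is not the engine's actual code: the `Vec2`, `Vec3`, `Vertex` and `GridMesh` types and the `CreateGrid` name are hypothetical stand-ins, the quads are assumed to be one unit wide, and the OpenGL buffer binding is left out.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical stand-ins for the engine's vertex and mesh data.
struct Vec2 { float x, y; };
struct Vec3 { float x, y, z; };
struct Vertex { Vec3 position; Vec3 normal; Vec2 texCoord; };
struct GridMesh {
    std::vector<Vertex> vertices;
    std::vector<unsigned int> indices;
};

// Shifts a vertex's x/z position by the offsets, then divides by the ranges
// so each coordinate lands between zero and one.
Vec2 GenerateTexCoord(const Vec3& p, float offsetX, float offsetZ,
                      float rangeX, float rangeZ) {
    return Vec2{(p.x + offsetX) / rangeX, (p.z + offsetZ) / rangeZ};
}

// Builds rows x cols unit quads on the xz plane, one quad per loop pass.
GridMesh CreateGrid(unsigned int rows, unsigned int cols) {
    assert(rows > 0 && cols > 0);
    GridMesh mesh;
    const Vec3 up{0.0f, 1.0f, 0.0f};
    const Vec2 noUv{0.0f, 0.0f};
    // Two triangles per quad, counter-clockwise when viewed from above.
    const unsigned int quad[6] = {0, 3, 2, 0, 2, 1};

    for (unsigned int r = 0; r < rows; ++r) {
        for (unsigned int c = 0; c < cols; ++c) {
            const unsigned int base = static_cast<unsigned int>(mesh.vertices.size());
            const float x = static_cast<float>(c);  // loop iterators act as the offset
            const float z = static_cast<float>(r);
            mesh.vertices.push_back(Vertex{Vec3{x, 0.0f, z}, up, noUv});
            mesh.vertices.push_back(Vertex{Vec3{x + 1.0f, 0.0f, z}, up, noUv});
            mesh.vertices.push_back(Vertex{Vec3{x + 1.0f, 0.0f, z + 1.0f}, up, noUv});
            mesh.vertices.push_back(Vertex{Vec3{x, 0.0f, z + 1.0f}, up, noUv});
            for (unsigned int i : quad) mesh.indices.push_back(base + i);
        }
    }

    // Find the min and max of x and z across all vertices.
    float minX = mesh.vertices[0].position.x, maxX = minX;
    float minZ = mesh.vertices[0].position.z, maxZ = minZ;
    for (const Vertex& v : mesh.vertices) {
        minX = std::min(minX, v.position.x);
        maxX = std::max(maxX, v.position.x);
        minZ = std::min(minZ, v.position.z);
        maxZ = std::max(maxZ, v.position.z);
    }

    // Absolute range and offset per axis, as described in the text.
    const float rangeX = (minX - maxX) * -1.0f;
    const float rangeZ = (minZ - maxZ) * -1.0f;
    const float offsetX = 0.0f - minX;
    const float offsetZ = 0.0f - minZ;

    for (Vertex& v : mesh.vertices) {
        v.texCoord = GenerateTexCoord(v.position, offsetX, offsetZ, rangeX, rangeZ);
    }
    return mesh;
}
```

In this sketch each quad owns its four corners, so neighbouring quads duplicate the vertices they share. Sharing them through the index buffer would shrink the vertex list at the cost of slightly more index bookkeeping.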

Code Samples - Mesh & Terrain Generation

Following the creation of the terrain plane and noise texture, the texture is fed to a terrain shader, which uses the red channel and a scalar to offset each vertex's y position in the vertex shader. In the fragment shader, the noise texture and two terrain textures (grass and rock in this example) are sampled and output based on scalar values. For instance, a scalar is calculated for the backdrop, where red isn't dominant, and the out colour is set to the sampled grass texture multiplied by that backdrop scalar. The same applies to the rock texture, except that it is multiplied by the red channel's value. Additionally, the textures are tiled by multiplying the texture coordinates by forty, which looks more appealing and natural than a single stretched-out texture.
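The blend described above can be sketched on the CPU as follows. This is only an approximation of what the fragment shader does, under two assumptions that are not stated in the text: the backdrop scalar is taken to be one minus the red channel, and the two weighted colours are added together. The `Colour` type and the function names are hypothetical.

```cpp
#include <algorithm>

// Hypothetical stand-in for an RGB colour sampled from a texture.
struct Colour { float r, g, b; };

// Scales a 0..1 texture coordinate so the texture repeats `tiling` times
// (the text uses forty), assuming a repeating wrap mode on the texture.
float TileCoord(float coord, float tiling) {
    return coord * tiling;
}

// Blends already-sampled grass and rock colours using the noise red channel.
// Assumption: backdrop (grass) weight = 1 - red, rock weight = red.
Colour BlendTerrain(const Colour& grass, const Colour& rock, float noiseRed) {
    const float rockWeight = std::max(0.0f, std::min(1.0f, noiseRed));
    const float grassWeight = 1.0f - rockWeight;
    return Colour{
        grass.r * grassWeight + rock.r * rockWeight,
        grass.g * grassWeight + rock.g * rockWeight,
        grass.b * grassWeight + rock.b * rockWeight};
}
```

With these weights, low areas of the noise texture show mostly grass and high areas show mostly rock, which matches the height offset driven by the same red channel.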

Code Samples - Terrain Texturing

Scene System & Lighting Shader

Having used Unity extensively over the past three years and recently started exploring Unreal Engine, I decided to architect my systems in a similar way. This began with a scene system that stores all instances of created game objects and light sources. At the moment the scene is also responsible for handling lighting, post-processing and shader binding, but I plan to refactor these into their own classes that derive from a component base class, just like Unity's component system. Once these changes are made, the scene will essentially only store game objects and call their update and draw methods. This will eventually allow me to serialize and load scenes in the editor, as industry-standard engines do.

The Oren-Nayar method of diffuse reflectance and the Cook-Torrance method of specular reflectance are used to simulate physically based lighting from every light source in the scene. This is done in the lighting fragment shader, which samples material textures such as diffuse, normal, specular and depth maps, and reads material field values such as Ka, Kd and Ks and light field values such as Ia, Id and Is. The shader loops through all the light sources and calculates the diffuse and specular colours each pass using the Oren-Nayar and Cook-Torrance methods, with the light source's colour, strength and attenuation (based on distance to the light source, diffuse only) multiplied together and added onto the final out colour. Following the lighting loop, the calculated ambient, diffuse and specular colours are multiplied by their respective light and material field values (i.e. ambient += Ia * Ka * texDiffuse), added together and output as the final colour.
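To give a sense of the diffuse half of that loop, here is a minimal C++ sketch of the widely used simplified Oren-Nayar term for a single light. It is written on the CPU for illustration only and is not the engine's shader. The `Vec3` type, the helper functions and the `roughness` parameter name are assumptions, and all input vectors are expected to be normalized.

```cpp
#include <algorithm>
#include <cmath>

// Hypothetical minimal vector type and helpers for this sketch.
struct Vec3 { float x, y, z; };

float Dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 Sub(const Vec3& a, const Vec3& b) { return Vec3{a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 Scale(const Vec3& a, float s) { return Vec3{a.x * s, a.y * s, a.z * s}; }

// Normalizes a vector, returning zero for (near) zero-length input to avoid NaNs.
Vec3 SafeNormalize(const Vec3& v) {
    const float len = std::sqrt(Dot(v, v));
    if (len < 1e-6f) return Vec3{0.0f, 0.0f, 0.0f};
    return Scale(v, 1.0f / len);
}

// Simplified Oren-Nayar diffuse term.
// N: surface normal, L: direction to the light, E: direction to the eye.
// With roughness 0 this reduces to plain Lambert (N dot L).
float OrenNayar(const Vec3& N, const Vec3& L, const Vec3& E, float roughness) {
    const float NdL = std::max(0.0f, Dot(N, L));
    const float NdE = std::max(0.0f, Dot(N, E));

    const float R2 = roughness * roughness;
    const float A = 1.0f - 0.5f * R2 / (R2 + 0.33f);
    const float B = 0.45f * R2 / (R2 + 0.09f);

    // Cosine of the angle between light and view once both are projected
    // onto the surface's tangent plane.
    const Vec3 lightProjected = SafeNormalize(Sub(L, Scale(N, NdL)));
    const Vec3 viewProjected = SafeNormalize(Sub(E, Scale(N, NdE)));
    const float CX = std::max(0.0f, Dot(lightProjected, viewProjected));

    // sin(alpha) * tan(beta), with alpha the larger and beta the smaller angle.
    const float thetaL = std::acos(std::min(NdL, 1.0f));
    const float thetaE = std::acos(std::min(NdE, 1.0f));
    const float DX = std::sin(std::max(thetaL, thetaE)) *
                     std::tan(std::min(thetaL, thetaE));

    return NdL * (A + B * CX * DX);
}
```

In a loop over light sources, the returned value would be multiplied by that light's colour, strength and attenuation before being accumulated into the diffuse total.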

Code Samples - Scene & Lighting Shader

GameObject & Skybox & Camera System

Like Unity, the GameObject class acts as a data container that stores its transform, textures, mesh, a name and a tag, with the addition of an audio source. The mesh is what represents the game object in the scene, and currently only the transform and mesh colour can be manipulated. I plan to move to a component approach soon by creating a base class called Component that systems such as the audio source can derive from, so that components can be added to, edited on and removed from a game object's component list.

The game object is also used to render the skybox around the editor camera, using a primitive cube mesh that is created on start-up. Once the mesh is created, the skybox's textures are loaded through the CreateCubeMap function, which takes a vector of strings containing six texture asset locations. The function calls glBindTexture with GL_TEXTURE_CUBE_MAP to set the texture's type. Following the binding, the faces are looped over and uploaded through glTexImage2D with GL_TEXTURE_CUBE_MAP_POSITIVE_X as the target. Since the six face targets are consecutive enum values, I add the loop iterator onto the enum each pass, which lets me compactly assign a texture to all six sides of the cube. When drawing the mesh, the vertex shader uses the cube's vertex positions as texture coordinates, since a position on the cube is also a direction vector from the cube's origin. In the fragment shader, a samplerCube texture is sampled and output as the colour. Additionally, the skybox game object's position is always the same as the camera's, to prevent the camera from leaving the bounds of the cube.
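The enum offset used for the six cube map faces can be shown without an OpenGL context. The sketch below mirrors the value of GL_TEXTURE_CUBE_MAP_POSITIVE_X locally so it compiles without the OpenGL headers, and only plans which target each file would go to. The `CubeFaceTarget` and `PlanCubeMapUploads` names are hypothetical, and the image loading and glTexImage2D call are deliberately left as a comment.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Same value as GL_TEXTURE_CUBE_MAP_POSITIVE_X in the OpenGL headers,
// mirrored here so the sketch builds without them.
constexpr unsigned int kCubeMapPositiveX = 0x8515;

// The six face targets are consecutive: +X, -X, +Y, -Y, +Z, -Z.
constexpr unsigned int CubeFaceTarget(unsigned int faceIndex) {
    return kCubeMapPositiveX + faceIndex;
}

// Pairs each of the six texture paths with the target it would be uploaded to.
std::vector<std::pair<unsigned int, std::string>>
PlanCubeMapUploads(const std::vector<std::string>& faces) {
    assert(faces.size() == 6);
    std::vector<std::pair<unsigned int, std::string>> plan;
    for (std::size_t i = 0; i < faces.size(); ++i) {
        // In a real loader, the image at faces[i] would be decoded here and
        // its pixels passed to glTexImage2D with this target.
        plan.emplace_back(CubeFaceTarget(static_cast<unsigned int>(i)), faces[i]);
    }
    return plan;
}
```

Because the targets follow a fixed order, the order of the six paths in the vector decides which image ends up on which side of the skybox.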

Code Sample - GameObject & Skybox

I wanted the camera system to feel as intuitive as it does in any typical game engine or 3D modelling program, with W, A, S and D used to move the camera, the right mouse button to rotate and Shift to increase movement speed. The camera uses a projection matrix calculated with the glm::perspective function, and a view matrix calculated with the glm::lookAt function, which takes the camera's position, its position plus a forward direction vector, and an up direction vector. These matrices are used by every shader to render the geometry at the correct position and orientation in screen space. The camera's movement is accomplished by getting a direction vector (i.e. the forward vector for W and S) and adding it to or subtracting it from the camera's position. Rotation is accomplished by adding to theta and phi (the camera's angles) the mouse sensitivity multiplied by the change in the mouse's axis input since the last frame.
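A minimal sketch of that movement and rotation logic is shown below, without GLM so that it stands on its own. The `Camera` struct, its field names, the spherical-angle convention used in `Forward` and the pitch clamp are all assumptions made for illustration. In the engine, `position` and `LookTarget()` would be what gets handed to glm::lookAt along with an up vector.

```cpp
#include <algorithm>
#include <cmath>

// Hypothetical minimal vector type for this sketch.
struct Vec3 { float x, y, z; };

struct Camera {
    Vec3 position{0.0f, 0.0f, 0.0f};
    float theta = 0.0f;        // horizontal angle (yaw), in radians
    float phi = 0.0f;          // vertical angle (pitch), in radians
    float sensitivity = 0.01f; // radians per unit of mouse movement

    // Forward direction built from the two angles (assumed convention).
    Vec3 Forward() const {
        return Vec3{std::cos(phi) * std::cos(theta),
                    std::sin(phi),
                    std::cos(phi) * std::sin(theta)};
    }

    // The point the camera looks at: its position plus the forward vector.
    Vec3 LookTarget() const {
        const Vec3 f = Forward();
        return Vec3{position.x + f.x, position.y + f.y, position.z + f.z};
    }

    // Positive distance for W, negative for S. A Shift speed boost would
    // simply scale the distance before calling this.
    void Move(float distance) {
        const Vec3 f = Forward();
        position.x += f.x * distance;
        position.y += f.y * distance;
        position.z += f.z * distance;
    }

    // mouseDeltaX/Y: change in mouse position since the last frame.
    void Rotate(float mouseDeltaX, float mouseDeltaY) {
        theta += sensitivity * mouseDeltaX;
        phi -= sensitivity * mouseDeltaY;
        // Assumption: keep pitch just short of straight up or down so the
        // forward vector never lines up with the up vector.
        const float limit = 1.5f;
        phi = std::max(-limit, std::min(limit, phi));
    }
};
```

Strafing with A and D would work the same way as `Move`, using a right vector derived from the forward and up vectors instead of the forward vector.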

Code Samples - Camera System

Audio Engine

Like any high-profile modern game engine, I wanted an audio system capable of playing 2D and 3D audio that can loop, be triggered at any given event, have its volume controlled and be stopped at any point during run time. I accomplished this using the FMOD audio API, which I chose because of its wide use among triple-A game engines such as RAGE, CryEngine and ForzaTech, and because of its excellent documentation from the developers and community.

The Audio class is the system responsible for loading and playing audio files through the FMOD Studio API bindings. It contains only one pointer, to an implementation data struct that stores all the loaded audio data. The data struct uses maps to store sounds, channels, events and banks, and also holds references to the FMOD Studio system and the FMOD core system, which is responsible for low-level operations such as creating and playing sounds. When an audio source is created on a game object, an instance of the data struct is created, and sounds, banks and events are loaded via their respective load functions, each of which takes a string used to find them in their maps. For instance, to load an audio file, its asset location has to be provided and its mode attributes specified, such as whether it is 3D and whether it loops. These are then passed to the FMOD system's create sound call, which attempts to load and create the sound with the provided attributes, and the result is stored in the sound map.

However, I've barely scratched the surface of this audio system and still have a way to go with it, such as converting it into an attachable Component for game objects, possibly adding reverb zone effects, and possibly exposing fields for editing at run time through the editor's inspector UI.
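The load-and-store pattern described above can be sketched without FMOD itself. In the example below, `Sound` is a hypothetical stand-in for a loaded FMOD sound handle, and the point where the real engine would call into FMOD is marked with a comment. Only the sound map is shown, and the class and member names are assumptions.

```cpp
#include <cstddef>
#include <map>
#include <memory>
#include <string>

// Hypothetical stand-in for a loaded FMOD sound and its mode attributes.
struct Sound {
    std::string path;
    bool is3D;
    bool looping;
};

// Implementation struct holding the loaded audio data. The engine also keeps
// channels, events and banks in maps; only sounds are sketched here.
struct Implementation {
    std::map<std::string, Sound> sounds;
};

class Audio {
public:
    Audio() : impl_(new Implementation()) {}

    // Loads a sound once and stores it in the map, keyed by asset location.
    // Later requests for the same path return the stored entry.
    const Sound& LoadSound(const std::string& path, bool is3D, bool looping) {
        auto found = impl_->sounds.find(path);
        if (found != impl_->sounds.end()) {
            return found->second;
        }
        // The real engine would ask the FMOD core system to create the sound
        // here, with mode flags built from is3D and looping.
        return impl_->sounds.emplace(path, Sound{path, is3D, looping}).first->second;
    }

    std::size_t LoadedCount() const { return impl_->sounds.size(); }

private:
    // The class holds a single pointer to its implementation data.
    std::unique_ptr<Implementation> impl_;
};
```

Keying the map by asset location means an audio file requested by several game objects is only loaded once, at the cost of the first caller's mode attributes applying to everyone who shares it.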

Code Sample - Audio Engine

*Video Contains Audio